IBM, Stone Ridge Technology, Nvidia Break Supercomputing Record

It’s no secret that GPUs are inherently better than CPUs for complex parallel workloads. IBM’s latest collaborative effort with Stone Ridge Technology and Nvidia shines a light on the efficiency and performance gains GPUs offer for the reservoir simulations used in oil and gas exploration. The industry operates on the cutting edge of computing because of its massive data sets and complex simulations, so it is fairly common for companies to conduct technology demonstrations using these taxing workloads.

The effort began with 30 IBM Power S822LC for HPC (Minsky) servers outfitted with 60 IBM POWER8 processors (two per server) and 120 Nvidia Tesla P100 GPUs (four per server). The servers employed Nvidia’s NVLink technology for both CPU-to-GPU and peer-to-peer GPU communication and used EDR InfiniBand networking between nodes.

The companies conducted a 100-billion-cell engineering simulation on GPUs using Stone Ridge Technology’s ultra-scalable ECHELON petroleum reservoir simulator. The simulation modeled 45 years of oil production in a mere 92 minutes, easily breaking the previous record of 20 hours. The time savings are impressive, but they pale in comparison to the hardware savings.

ExxonMobil set the previous 100-billion-cell record in January 2017. ExxonMobil’s effort was quite impressive: the company employed 716,800 processor cores spread among 22,400 nodes on NCSA’s Blue Waters supercomputer (Cray XE6). That setup requires half a football field of floor space, whereas the IBM-powered systems fit within two racks and occupy floor space roughly half the size of a ping-pong table. The GPU-powered servers also required only one-tenth the power of the Cray machine.
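The headline numbers can be sanity-checked with a few lines of arithmetic. The sketch below uses only the figures reported in this article; the variable names are illustrative, and the per-node core count is simply the quotient of the reported totals rather than a published specification.

```python
# Figures as reported in the article; variable names are illustrative.
previous_record_minutes = 20 * 60   # ExxonMobil run: 20 hours
new_record_minutes = 92             # IBM / Stone Ridge / Nvidia run

# How many times faster the GPU run finished.
speedup = previous_record_minutes / new_record_minutes

blue_waters_cores = 716_800
blue_waters_nodes = 22_400
# Derived value (assumption: cores were spread evenly across nodes).
cores_per_node = blue_waters_cores // blue_waters_nodes

minsky_servers = 30
gpus_per_server = 4
total_gpus = minsky_servers * gpus_per_server

# Ratio of node counts between the two systems.
node_ratio = blue_waters_nodes / minsky_servers

print(f"Wall-clock speedup: {speedup:.1f}x")
print(f"Blue Waters cores per node: {cores_per_node}")
print(f"Total P100 GPUs: {total_gpus}")
print(f"Node-count ratio: about {node_ratio:.0f} to 1")
```

By these figures, the GPU cluster finished roughly 13 times faster while using about 1/747th as many nodes.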

The entire IBM cluster costs roughly $1.25 million to $2 million, depending on the memory, networking, and storage configuration, whereas the Exxon system would cost hundreds of millions of dollars. As such, IBM claims it offers faster performance in this particular simulation at 1/100th the cost.
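IBM’s 1/100th claim can be read backward to see what price tag it implies for the comparison system. This is a rough sketch that uses only the numbers above; the implied totals are derived here and were not reported by either company.

```python
# Reported price range for the 30-server IBM cluster, in US dollars.
ibm_cost_low = 1_250_000
ibm_cost_high = 2_000_000

# IBM's claim: its cluster costs 1/100th as much as the comparison system.
claimed_cost_factor = 100

# Derived values (not reported figures): what the claim implies.
implied_low = ibm_cost_low * claimed_cost_factor
implied_high = ibm_cost_high * claimed_cost_factor

print(f"Implied comparison-system cost: "
      f"${implied_low / 1e6:.0f} million to ${implied_high / 1e6:.0f} million")
```

The implied range of $125 million to $200 million is broadly in line with the "hundreds of millions" estimate.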

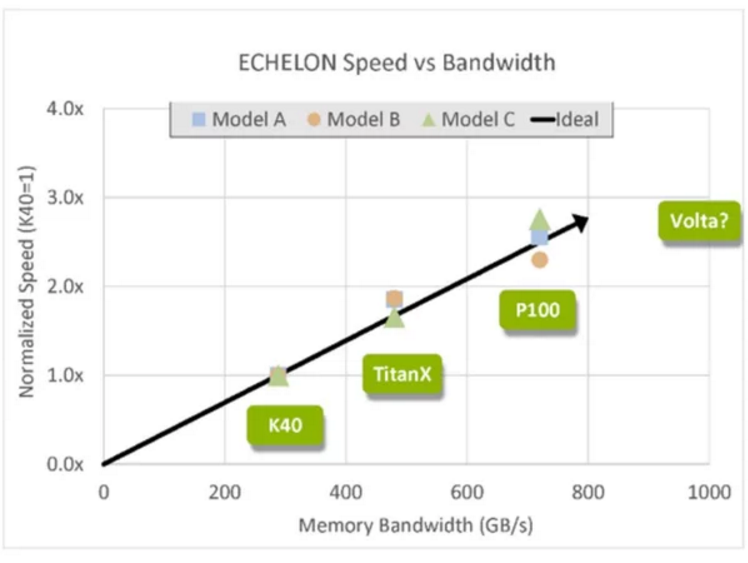

Such massive simulations are rarely used in the field, but this one does highlight the performance advantages of using GPUs instead of CPUs for this class of simulation. Memory bandwidth is a limiting factor in many simulations, so the P100’s memory throughput is a key advantage over the Xeon processors used in the ExxonMobil tests. From Stone Ridge Technology’s blog post outlining its achievement:

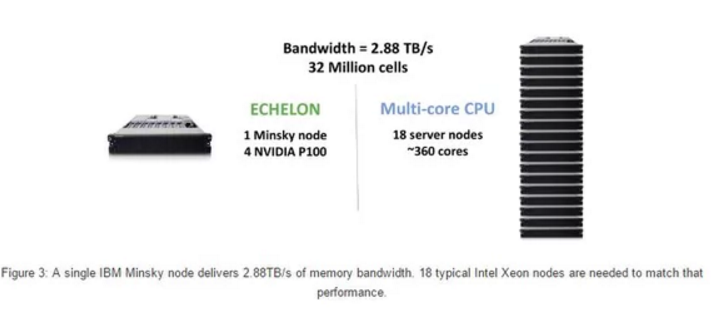

“On a chip to chip comparison between the state of the art NVIDIA P100 and the state of the art Intel Xeon, the P100 deliver 9 times more memory bandwidth. Not only that, but each IBM Minsky node includes 4 P100’s to deliver a whopping 2.88 TB/s of bandwidth that can address models up to 32 million cells. By comparison two Xeon’s in a standard server node offer about 160GB/s (See Figure 3). To just match the memory bandwidth of a single IBM Minsky GPU node one would need 18 standard Intel CPU nodes. The two Xeon chips in each node would likely have at least 10 cores each and thus the system would have about 360 cores.”
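The arithmetic behind the quote is easy to reproduce. The sketch below takes the per-node bandwidth figures and the 10-core assumption directly from Stone Ridge’s statement; the variable names are illustrative, and the per-chip values are derived by simple division.

```python
import math

# Figures from the Stone Ridge quote; variable names are illustrative.
minsky_node_bandwidth_gbs = 2880   # four P100s per node: 2.88 TB/s
gpus_per_node = 4
xeon_node_bandwidth_gbs = 160      # two Xeons in a standard server node
xeons_per_node = 2
cores_per_xeon = 10                # "at least 10 cores each" per the quote

# Derived per-chip bandwidth.
per_p100_gbs = minsky_node_bandwidth_gbs // gpus_per_node
per_xeon_gbs = xeon_node_bandwidth_gbs // xeons_per_node
chip_ratio = per_p100_gbs / per_xeon_gbs

# CPU nodes needed to match one Minsky node's aggregate GPU bandwidth.
cpu_nodes_needed = math.ceil(minsky_node_bandwidth_gbs / xeon_node_bandwidth_gbs)
cpu_cores_needed = cpu_nodes_needed * xeons_per_node * cores_per_xeon

print(f"Per P100: {per_p100_gbs} GB/s, per Xeon: {per_xeon_gbs} GB/s")
print(f"Chip-to-chip ratio: {chip_ratio:.0f}x")
print(f"CPU nodes to match one Minsky node: {cpu_nodes_needed}")
print(f"CPU cores in those nodes: {cpu_cores_needed}")
```

The results match the quote: a 9x chip-to-chip advantage, 18 CPU nodes, and about 360 cores.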

Xeons are definitely at a memory throughput disadvantage, but it would be interesting to see how a Knights Landing-equipped (KNL) cluster would stack up. With up to 500 GB/s of throughput from on-package MCDRAM, which Intel developed with Micron, KNL processors easily beat Xeon’s memory throughput. Intel also claims KNL offers up to 8x the performance per watt of Nvidia’s GPUs. That comparison is against previous-generation Nvidia products, but it is a big gap that likely wasn’t closed in a single generation.

IBM feels that its Power Systems paired with Nvidia GPUs can help other fields, such as computational fluid dynamics, structural mechanics, and climate modeling, reduce the amount of hardware and cost required for complex simulations. A simulation of this scale is hardly realistic for most oil and gas companies, but Stone Ridge Technology has also conducted 32-million-cell simulations on a single Minsky node, which might bring an impressive mix of cost and performance to bear for smaller operators.