Google’s Edge TPU Machine Learning Chip Debuts in Raspberry Pi-Like Dev Board

Google has officially released its Edge TPU (tensor processing unit) processors in its new Coral development board and USB accelerator. The Edge TPU is Google’s inference-focused application-specific integrated circuit (ASIC) that targets low-power “edge” devices and complements the company’s “Cloud TPU,” which targets data centers.

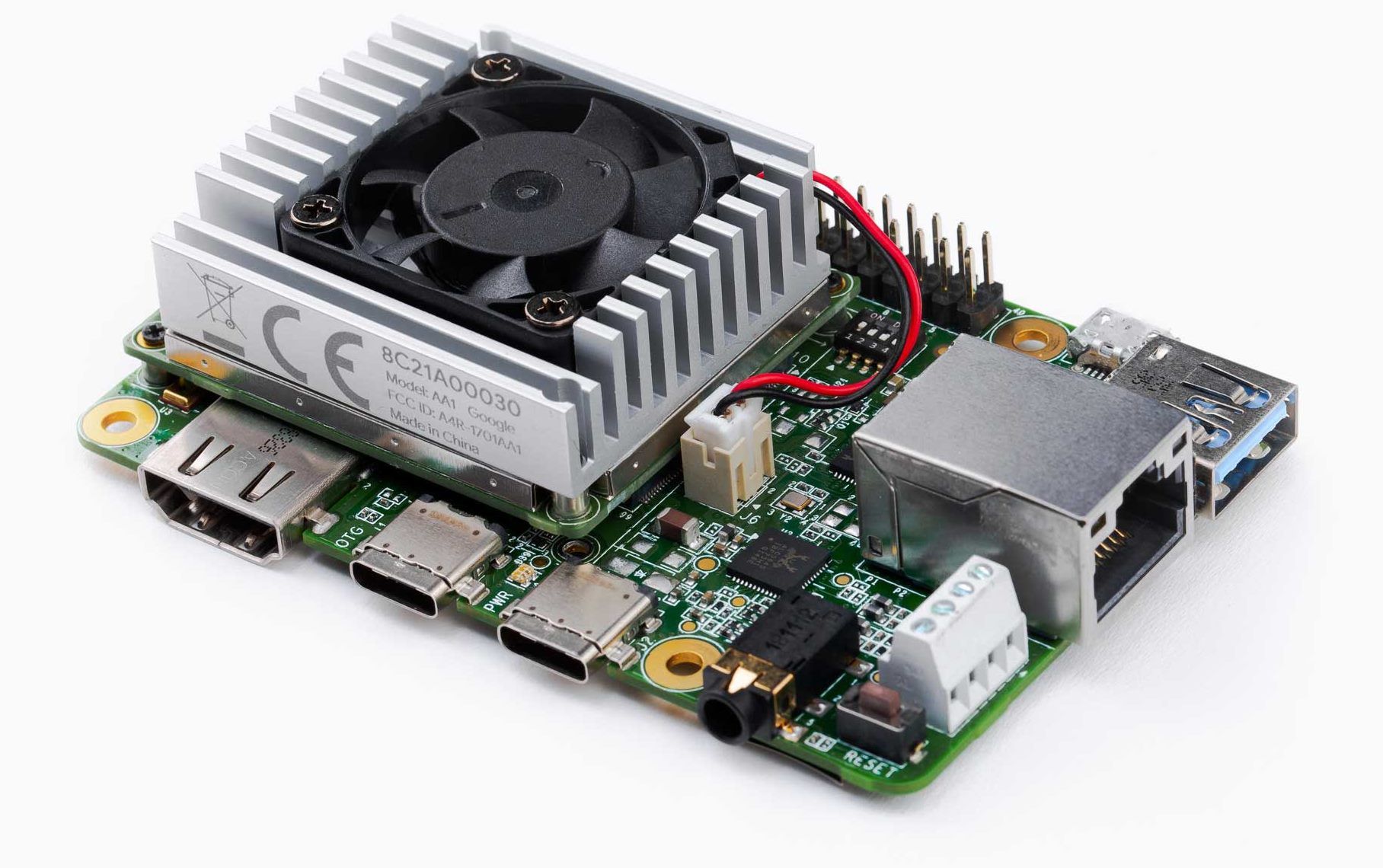

Coral Dev Board With Edge TPU

Last July, Google announced that it was working on a low-power version of its Cloud TPU to cater to Internet of Things (IoT) devices. The Edge TPU’s main promise is to free IoT devices from cloud dependence for intelligent data analysis. For instance, a surveillance camera would no longer need to rely on cloud analysis to identify the objects it sees in real time and could instead do so locally, thanks to the Edge TPU.

Google has now made a Raspberry Pi-style development board available to developers. It comes with a quad-core Arm Cortex-A53 CPU, an Arm Cortex-M4F real-time core and a Vivante GC7000 Lite GPU, all of which are connected to Google’s Edge TPU co-processor, which is capable of up to 4 trillion operations per second (TOPS).
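To put the “up to 4 TOPS” figure in perspective, here is a rough back-of-the-envelope sketch in Python. The function name and the 1-billion-operation model size are hypothetical values chosen for illustration, not figures from Google, and the result is only a theoretical ceiling: real throughput will be lower because of memory access, data transfer and operations that cannot run on the Edge TPU.

```python
def peak_inferences_per_second(peak_tops: float, ops_per_inference: float) -> float:
    """Upper bound on inferences per second if every operation ran at the peak rate."""
    if ops_per_inference <= 0:
        raise ValueError("ops_per_inference must be positive")
    return (peak_tops * 1e12) / ops_per_inference


EDGE_TPU_PEAK_TOPS = 4.0        # "up to 4 TOPS", as quoted for the Edge TPU
HYPOTHETICAL_MODEL_OPS = 1e9    # assumption: a model needing 1 billion ops per inference

bound = peak_inferences_per_second(EDGE_TPU_PEAK_TOPS, HYPOTHETICAL_MODEL_OPS)
print(f"Theoretical ceiling: {bound:,.0f} inferences per second")
# prints "Theoretical ceiling: 4,000 inferences per second"
```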

The board also ships with 1GB of LPDDR4 RAM, 8GB of eMMC (embedded MultiMediaCard) memory, Wi-Fi 2×2 MIMO (802.11b/g/n/ac 2.4/5GHz bands) and Bluetooth 4.1. It supports Debian Linux and the TensorFlow Lite machine learning software framework.

Coral USB Accelerator

Google also revealed a USB accelerator, which is similar to Intel’s Neural Compute Stick. Like the Coral dev board, the Coral USB Accelerator comes with an embedded Edge TPU. It is designed to draw little power and comes in a form that is more accessible to developers, who can connect it to other computers or even other boards, such as a Raspberry Pi, to give them a machine learning performance boost.

The Coral USB Accelerator also comes with a Cortex-M0+ microcontroller clocked at 32MHz, 16KB of flash memory and 2KB of RAM. It can connect to other devices via a USB Type-C connector that supports 5Gb/s. Like the dev board, the USB Accelerator supports TensorFlow Lite.
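As a rough illustration of what a 5Gb/s link means for an external accelerator, the Python sketch below estimates the minimum time needed to send one input image over USB. The helper name and the 224×224 8-bit RGB frame size are assumptions made for the example, and the figure ignores protocol overhead, so actual transfers will take longer.

```python
def min_transfer_ms(payload_bytes: int, link_gbps: float) -> float:
    """Lower bound on transfer time in milliseconds at the raw link rate."""
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    bits = payload_bytes * 8
    return bits / (link_gbps * 1e9) * 1e3


USB_LINK_GBPS = 5.0            # connector speed quoted for the USB Accelerator
frame_bytes = 224 * 224 * 3    # assumption: one 224x224 RGB image, 1 byte per channel

print(f"{frame_bytes} bytes take at least {min_transfer_ms(frame_bytes, USB_LINK_GBPS):.3f} ms")
# prints "150528 bytes take at least 0.241 ms"
```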

Google’s TPUs

Back in 2016, Google surprised the world with its own machine learning-focused processor called a “tensor processing unit.” According to Google, the TPU was up to 30 times faster and more efficient than contemporary CPUs and GPUs for certain popular machine learning training and inference applications. This is because the chip was designed from the beginning with machine learning in mind.

Later on, Nvidia also started integrating “tensor cores” into its GPUs, shrinking the disparity between the two architecture types significantly. However, Google’s latest Cloud TPUs still seem to have some advantages in terms of performance and price over Nvidia’s GPUs.

Google had been using the TensorFlow framework internally for its own machine learning projects, and later developed hardware optimized for it. One of the primary reasons for open sourcing the framework was that doing so enabled an entire ecosystem of developers and projects that essentially kept improving Google’s software.

Even third parties, including Nvidia, have started building hardware that works well with the TensorFlow framework. Now that Google has started selling highly optimized TensorFlow chips for the booming IoT industry, the TensorFlow framework is likely to become even more popular.